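In the case of Linear Regression, the cost function is the mean squared error over the m training examples:

$$J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \frac{1}{2} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$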

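But for Logistic Regression, the hypothesis is the sigmoid of a linear function of x:

$$h_\theta(x) = \frac{1}{1 + e^{-\theta^T x}}$$

Plugging this hypothesis into the squared-error cost results in a non-convex cost function. A non-convex cost function can have local optima, which is a very big problem for Gradient Descent: it may get stuck in one of them instead of reaching the global optimum.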
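The non-convexity can be checked numerically. The following is a minimal sketch, assuming a single made-up training example (x = 1, y = 0) and a one-dimensional θ; the function names are illustrative only. A convex function never lies above the chord between two of its points, so one violation of the midpoint inequality is enough to show the squared-error cost is not convex here.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def squared_error_cost(theta, x=1.0, y=0.0):
    # Squared-error cost of ONE hypothetical example with a sigmoid hypothesis.
    return 0.5 * (sigmoid(theta * x) - y) ** 2

def violates_midpoint_convexity(cost, a, b):
    # A convex function satisfies f((a + b) / 2) <= (f(a) + f(b)) / 2.
    midpoint_value = cost((a + b) / 2.0)
    chord_value = (cost(a) + cost(b)) / 2.0
    return midpoint_value > chord_value

# Compare theta = 0 and theta = 10 with their midpoint theta = 5.
print(violates_midpoint_convexity(squared_error_cost, 0.0, 10.0))
```

The check prints `True` for this pair of points, meaning the curve bulges above its chord and so cannot be convex.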

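So, for Logistic Regression the cost function is defined per example using logarithms:

$$\text{Cost}(h_\theta(x), y) = \begin{cases} -\log(h_\theta(x)) & \text{if } y = 1 \\ -\log(1 - h_\theta(x)) & \text{if } y = 0 \end{cases}$$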

If y = 1, Cost = -log(hθ(x)), so

Cost = 0 if y = 1 and hθ(x) = 1

But as

hθ(x) -> 0

Cost -> Infinity

That is, a confident wrong prediction is penalized very heavily.

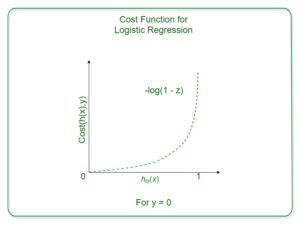

If y = 0, Cost = -log(1 - hθ(x)), so Cost = 0 if hθ(x) = 0, but as hθ(x) -> 1, Cost -> Infinity.
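The behaviour of the two cases can be sketched in a few lines. This is only an illustration: `cost` is a hypothetical helper name, and the values of h are arbitrary probabilities chosen to show a confident correct, an undecided, and a confident wrong prediction.

```python
import math

def cost(h, y):
    # Per-example cost: -log(h) when y == 1, -log(1 - h) when y == 0.
    # h is the predicted probability h_theta(x), assumed to lie in (0, 1).
    if y == 1:
        return -math.log(h)
    return -math.log(1.0 - h)

for h in (0.999, 0.5, 0.001):
    print(f"h={h}: cost if y=1 is {cost(h, 1):.4f}, cost if y=0 is {cost(h, 0):.4f}")
```

For y = 1 the cost grows as h moves toward 0, and for y = 0 it grows as h moves toward 1, mirroring the two cases described above.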

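So, the two cases can be combined into a single expression, and averaging it over the m training examples gives the cost function:

$$\text{Cost}(h_\theta(x), y) = -y \log(h_\theta(x)) - (1 - y) \log(1 - h_\theta(x))$$

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log(h_\theta(x^{(i)})) + (1 - y^{(i)}) \log(1 - h_\theta(x^{(i)})) \right]$$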
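As a rough sketch of the averaged log cost J(θ), the snippet below uses plain Python lists. The toy data set and the names `hypothesis` and `cost_function` are assumptions made for illustration; each input row carries a leading 1 so that θ0 acts as the intercept.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hypothesis(theta, x):
    # h_theta(x) = sigmoid(theta^T x)
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

def cost_function(theta, X, y):
    # J(theta) = -(1/m) * sum(y * log(h) + (1 - y) * log(1 - h))
    m = len(X)
    total = 0.0
    for xi, yi in zip(X, y):
        h = hypothesis(theta, xi)
        total += yi * math.log(h) + (1 - yi) * math.log(1.0 - h)
    return -total / m

# Made-up toy data: one feature plus a leading 1 for the intercept.
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0], [1.0, 4.0]]
y = [0, 0, 1, 1]

print(cost_function([0.0, 0.0], X, y))   # theta = 0 predicts 0.5 everywhere
print(cost_function([-5.0, 2.0], X, y))  # a better-fitting theta gives a lower cost
```

With θ = 0 every prediction is 0.5, so the cost equals log 2; parameters that separate the two classes give a smaller value.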

To fit the parameters θ, J(θ) has to be minimized, and Gradient Descent is used for that.

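Gradient Descent: the update rule looks similar to that of Linear Regression, but the difference lies in the hypothesis hθ(x), which is now the sigmoid function. Repeat until convergence, updating all θj simultaneously (α is the learning rate):

$$\theta_j := \theta_j - \frac{\alpha}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$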
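A minimal sketch of batch Gradient Descent for Logistic Regression follows. The toy data, the learning rate `alpha = 0.1`, and the iteration count are all assumptions picked for illustration, not recommended settings; the gradient includes the 1/m factor that comes from averaging the cost over the training examples.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(theta, x):
    # h_theta(x) = sigmoid(theta^T x); this is the only part that differs
    # from the Linear Regression version, where h_theta(x) = theta^T x.
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

def gradient_descent(X, y, alpha=0.1, iterations=5000):
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    for _ in range(iterations):
        # Prediction errors h_theta(x_i) - y_i for the current theta.
        errors = [predict(theta, xi) - yi for xi, yi in zip(X, y)]
        # Simultaneous update of every theta_j from the same errors.
        theta = [
            theta[j] - (alpha / m) * sum(e * xi[j] for e, xi in zip(errors, X))
            for j in range(n)
        ]
    return theta

# Made-up toy data: one feature plus a leading 1 for the intercept.
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0], [1.0, 4.0]]
y = [0, 0, 1, 1]

theta = gradient_descent(X, y)
for xi, yi in zip(X, y):
    print(xi[1], yi, round(predict(theta, xi), 3))
```

After training, the predicted probabilities fall below 0.5 for the examples labelled 0 and above 0.5 for those labelled 1.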